This episode will be part of the 2021 competition. For a detailed and up-to-date description, please refer to the official rule book (to be released in April).

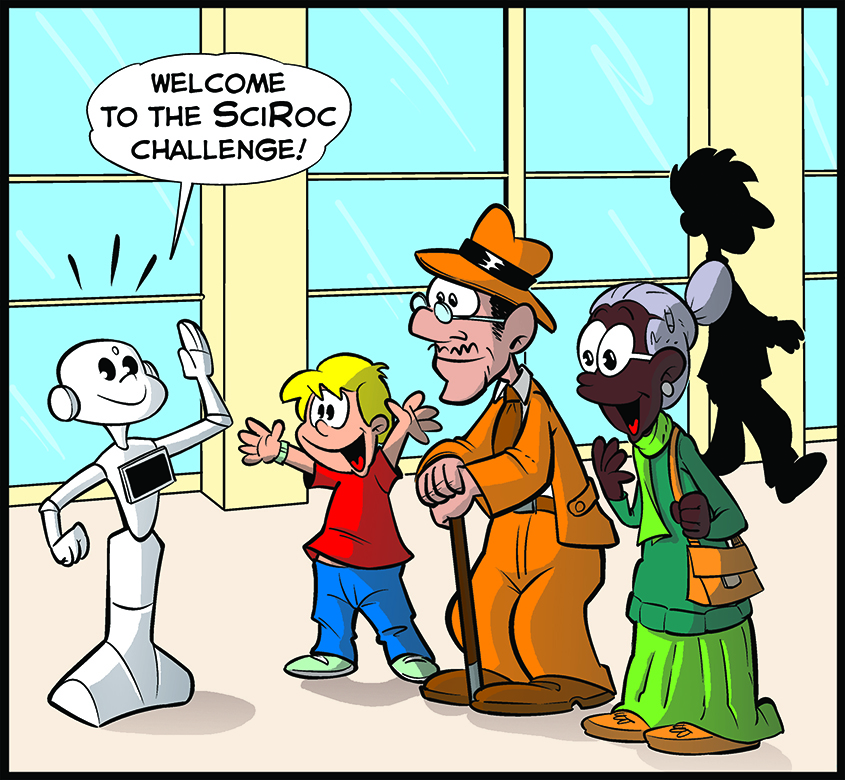

General Description.

A specific challenge we aim to explore for a robot operating in a coffee shop is interaction through the sign languages developed by and for deaf people. The world of sign language is quite complex, and there are many sign languages; since the competition is hosted in Italy, the chosen variant is the Italian one. However, the full language would be out of reach. Therefore, a suitable subset of Italian Sign Language (LIS) will be devised that supports the basic interaction in the coffee shop and can be handled by a TIAGO robot with a single arm and hand (or any other platform with similar functionality).

The interaction through LIS is addressed specifically by Episode 2, but it will also be an addition to Episode 1 (the Coffee Shop episode): teams may implement LIS interaction in Episode 1 to gain a better score. In this way, teams can enter the competition in Episode 2 and focus on sign language only, without having to implement all the functionalities required for Episode 1.

The challenge is naturally split according to the two directions of the communication flow: the robot interpreting sign language performed by humans (LIS comprehension) and the robot “speaking” in sign language (LIS production).

Platforms allowed.

Thanks to the support of PAL Robotics, a TIAGO robot equipped with one arm and a hand will be available to support both on-site and remote participation. Any mobile base with one arm and a hand is allowed to enter the competition.

Set up.

The subset of sign language to be used in the competition is currently being defined. We are considering 20 signs and 10 phrases. Signs will be defined both for specific items to be ordered at the coffee shop and for simple sentences that support the interaction. The language definition will be delivered together with a set of videos in which the signs are performed by experts.

For sign language comprehension, experts will be placed in front of the robot, and the robot should classify the signs or phrases they perform. Multiple rounds will be arranged with the support of multiple experts.

For sign language production, the robot will be asked to perform several signs, and a panel of 3 experts acting as referees will evaluate the correctness and quality of the execution according to a predefined evaluation form.

![One Arm TIAGO Robot with the detail of the hey5 hand](https://isds.kmi.open.ac.uk/isds-data/uploads/2021/03/e02-sign-language-generation-interpretation-4.jpg)

Fig. 2 One Arm TIAGO Robot with the detail of the hey5 hand

Procedure.

Following the description above, the procedure is split into two parts:

Task 1 (Interpretation): The robot is placed in front of an expert and should comprehend the sign language performed by the expert. In each run, the expert will perform a series of signs/phrases. Each team will face the same set of experts across repeated runs. The comprehension difficulty will increase progressively, ranging from single signs to sequences of two signs and to phrases with more than two signs.

Task 2 (Generation): The robot is placed in front of the expert referees and will be given a list of signs/phrases to perform. The production task also increases in difficulty, ranging from single signs to sequences of two signs and to phrases with more than two signs.

The challenge will run over 3 days: 2 days of preliminaries and 1 day of finals. The number of runs for both tasks on each day will be defined based on the number of participating teams.

Since this episode focuses on two specific functionalities, performance will be evaluated using the following measures:

- The number of correctly interpreted signs.

- The score achieved in the execution of sign language instances, which is based on both quantitative and qualitative criteria.
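The first measure can be illustrated with a small sketch of how correctly interpreted signs might be tallied across runs of increasing difficulty. The official rule book defines the actual scoring; the matching rule used here (a sign counts only if it equals the expected sign at the same position in the sequence), the function names `count_correct_signs` and `total_correct`, and the sign labels are all hypothetical assumptions made for illustration.

```python
from typing import Sequence, Tuple


def count_correct_signs(expected: Sequence[str], predicted: Sequence[str]) -> int:
    """Count signs the robot classified correctly in one run.

    Hypothetical scoring rule (not from the rule book): a sign counts as
    correct only if it matches the expected sign at the same position.
    """
    # zip stops at the shorter sequence, so missing or extra predictions score 0
    return sum(1 for exp, pred in zip(expected, predicted) if exp == pred)


def total_correct(runs: Sequence[Tuple[Sequence[str], Sequence[str]]]) -> int:
    """Sum the correctly interpreted signs over all runs of a team."""
    return sum(count_correct_signs(exp, pred) for exp, pred in runs)


if __name__ == "__main__":
    # Hypothetical sign labels; the official LIS subset is still being defined.
    runs = [
        (["coffee"], ["coffee"]),                   # single sign: 1 correct
        (["coffee", "water"], ["coffee", "tea"]),   # two-sign sequence: 1 correct
        (["i", "want", "coffee"], ["i", "want"]),   # phrase, last sign missed: 2 correct
    ]
    print(total_correct(runs))  # prints 4
```

Position-wise matching is only one possible choice; an alignment-based rule (for example, one tolerant of inserted or skipped signs) would reward partially correct phrases differently.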
